When Uruguay comes up, football fans immediately think of names like Luis Suárez, Diego Forlán, and Edinson Cavani. To people who do not follow football, though, the country may sound unfamiliar. Yet this South American gem has made it onto Lonely Planet's list of the “Top 10 Best Countries to Travel To”.

Compared with its South American neighbors, Uruguay offers something distinctive. It combines the warmth of Brazil with the quality of life of Argentina, which makes it a favorite among South America's elite. Its stunning natural landscapes, charming coastline, excellent air quality, and stable security have earned it the title “Switzerland of South America”. Because of its gem-like beauty and rich amethyst deposits, it is affectionately called the “Land of Diamonds”.

Uruguay's Beaches and Relaxed Atmosphere

Uruguay is home to some of South America's best beaches, which draw surfers from around the world every year. The mood here is carefree and laid-back: European visitors enjoy the freedom of a “nude town”. Rumor has it that even former US President George W. Bush bought a few properties here!

Anyone who likes an unhurried “retiree” lifestyle will find Uruguay the perfect place. Stroll through the streets with a camera in one hand and a mate in the other, and your memory card will quickly fill up with picturesque views.

Uruguay's Carnival: A Cultural Spectacle

The liveliest time of the year is Carnival in February. The streets come alive to the rhythm of samba, wild African drums, and spectacular parades, a unique blend of South American and European influences.

Football, Beaches, and Fun

The Centenario Stadium in Montevideo has historic significance: it hosted the first FIFA World Cup in 1930, which Uruguay won. The stadium is still in frequent use today. The Museo del Fútbol inside displays trophies and memorabilia that document the country's football heritage. Afterwards, be sure to try a “Suárez burger” or a “Cavani burger”, both local specialties.

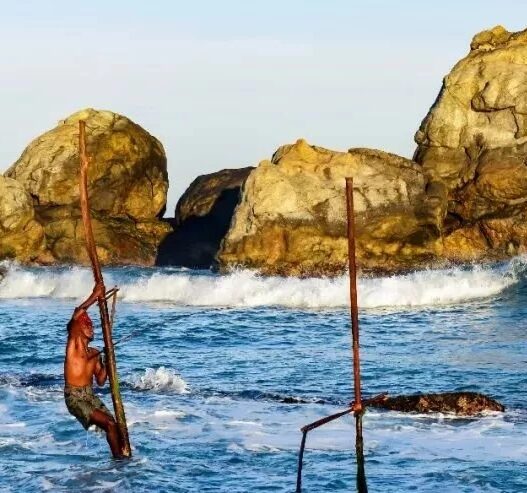

In summer, people from all over the world flock here. The beaches are full of surfers, and the gentle winds offer suitable conditions for every skill level. In November and December, local music performances and delicious seafood restaurants make this place even more enticing.

One of the most famous sights is Casapueblo, a unique hotel and museum built by an artist who once lived there. It is perched on the cliffs overlooking the sea, and its unusual design and breathtaking views make it a dream destination for photographers. After the artist's death it was converted into a museum, and today visitors can stay overnight in rooms that have a truly artistic flair.

South America's Longest Carnival

Uruguay's Carnival stretches from January to March and lasts up to two months. This extended festival combines Brazilian samba, Argentine tango, and African drum rhythms into a lively atmosphere found nowhere else in the world.

Montevideo: European Flair in South America

Montevideo, one of the capital cities farthest from Beijing, captivates with a charming blend of Spanish and other European influences. The heart of the city is Plaza Independencia, with its monument to Artigas, the “father of independence”. One of Montevideo's most striking landmarks is the Palacio Salvo, a 29-story building erected in 1928. Today it houses offices and apartments and is a popular stop for tourists.

Much of Montevideo's charm is concentrated in its Old Town. A walk along its cobblestone streets gives an authentic feel for the city's unique culture. In this historic quarter you will find handmade jewelry, colorful fruit stalls, and even Beatles-themed bars. Murals and graffiti that tell the city's history in vivid colors make the streets more interesting still.

Chivito & Chorizo: Local Specialties

The chivito, Uruguay's version of a beef sandwich, is a must. Although it looks like a burger, it is much more than that. Filled with steak, egg, cheese, bacon, olives, lettuce, and various sauces, it offers a perfect combination of flavors. Another popular dish is chorizo, a sausage that is often grilled and typically served in a sandwich. It is easy to find at street stalls and can be dressed up with your favorite condiments or toppings.

Mate: Uruguay's National Drink

A trip to Uruguay is incomplete without mate, the national drink. It is common to see locals carrying a thermos of hot water and a mate cup. Even the French footballer Antoine Griezmann picked up the habit after his Uruguayan teammates introduced him to it.