What makes life enjoyable? For some, it is the simple pleasure of a warm meal shared with loved ones. For others, it is the thrill of adventure or the calm of quiet reflection. Our ideas of pleasure are not universal: they are shaped by the cultures we grow up in and the environments we live in. From bustling cityscapes to peaceful rural settings, and from Eastern philosophies to Western ideals, the way we perceive and pursue joy varies dramatically around the world.

In this blog, we will look at how cultures and environments influence our notions of pleasure, explore real-world examples, and discuss why understanding these differences enriches our perspectives.

The Role of Culture in Shaping Pleasure

Traditions and Celebrations

Cultural traditions play a significant role in defining what people consider enjoyable. In India, for example, festivals such as Diwali bring joy through community gatherings, vibrant decorations, and the sharing of sweets. Scandinavian cultures, in contrast, embrace the concept of hygge, a sense of coziness and comfort, and find happiness in simple moments such as savoring a hot chocolate by the fireplace.

Food and Culinary Practices

Food is a universal source of pleasure, but what we eat and how we eat it vary widely. In Japan, the art of savoring a meticulously prepared sushi meal is as much about mindfulness as it is about flavor. In Italy, meanwhile, long, leisurely meals full of laughter and conversation highlight the social side of eating.

Philosophical Foundations

Cultural philosophies influence what we value as pleasure. Eastern philosophies such as Buddhism, for example, often emphasize inner peace and contentment, finding joy in simplicity and mindfulness. Western cultures, by contrast, may lean toward achievement and indulgence, deriving pleasure from personal success and material comfort.

Environmental Factors and Pleasure

Natural Landscapes

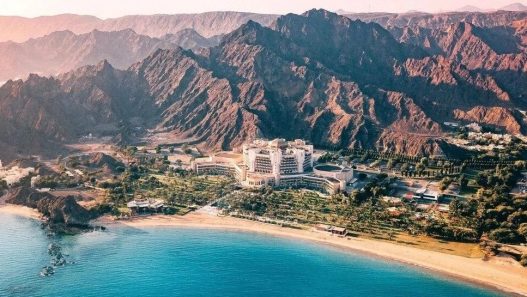

Where we live profoundly shapes our sources of joy. People who live near mountains may find happiness in hiking and exploring rugged terrain, while those who live near the ocean may enjoy surfing, beach walks, or simply watching the waves.

Urban vs. Rural Environments

City dwellers often seek pleasure in fast-paced activities such as nightlife, dining out, or attending cultural events. Rural settings, in contrast, may foster pleasure in slower, nature-based activities such as farming, stargazing, or long walks through open fields.

Climate and Seasons

Climate also plays a role. The Nordic countries, with their long winters, often celebrate the fleeting summer months with festivals and outdoor gatherings. Tropical regions, meanwhile, may embrace water activities or shaded pastimes to escape the heat.

Case Studies: Joy Across Cultures

Japan: The Art of Hanami

In Japan, hanami, the tradition of viewing cherry blossoms, is a cherished activity. Families and friends gather under blooming cherry trees for picnics, celebrating the fleeting beauty of nature. The practice emphasizes mindfulness and living in the moment.

Spain: The Fiesta Spirit

Spain is known for its vibrant festivals and traditions, such as La Tomatina and bullfighting. These events are deeply rooted in Spanish culture and bring communities together through shared excitement and tradition.

Africa: The Philosophy of Ubuntu

In many African cultures, particularly in southern Africa, the concept of Ubuntu ("I am because we are") shapes pleasure. Joy is found in communal life, shared experiences, and mutual support, which highlights the importance of human connection.

USA: The Pursuit of Individual Happiness

In the United States, pleasure is often tied to individual accomplishments, such as reaching career goals, pursuing personal hobbies, or having unique travel experiences. This reflects the cultural value placed on independence and personal fulfillment.

How Cross-Cultural Understanding Enhances Pleasure

Understanding how different cultures and environments shape pleasure can:

- Broaden Our Horizons: Learning about the joys of other cultures can inspire us to try new activities, foods, or practices.

- Foster Empathy: Recognizing diverse perspectives helps us appreciate why people value different forms of pleasure.

- Enrich Our Lives: Incorporating elements of other cultures into our lives can make our experiences more rewarding.

Practical Ways to Explore Diverse Notions of Pleasure

Travel and Exploration

Traveling to new places exposes us to different cultures and environments. Whether we join a local festival or simply observe everyday life, travel can transform our view of pleasure.

Cultural Exchanges

Taking part in cultural exchanges, such as hosting international guests or joining global online communities, lets us share and learn about different sources of joy.

Adopting New Practices

Try adopting traditions from other cultures, such as practicing mindfulness, celebrating a new holiday, or cooking an international dish. These small steps can bring new pleasures into your life.

Connecting with Nature

Explore your local environment and embrace the activities it offers, whether that means walking in a nearby park, paddling on a river, or simply watching the sunrise. Connecting with the beauty of nature is a universal source of joy.

The Role of Technology in Bridging Cultural Gaps in Pleasure

In today's interconnected world, technology lets us experience the joys of other cultures without leaving home. Virtual reality, for example, lets us "visit" distant places, while social media platforms connect us with people who hold different perspectives. These tools can help us broaden our understanding of pleasure and enrich our lives.

Final Thoughts

Our notions of pleasure are as diverse as the cultures and environments that shape them. By exploring these differences, we can learn to appreciate life's many forms of joy and even bring new elements into our own lives. Whether we are savoring the simple pleasure of a home-cooked meal or embracing the thrill of an adventure, understanding and celebrating diverse sources of pleasure makes life richer and more rewarding.

So the next time you are seeking happiness, consider stepping outside your comfort zone and embracing a new perspective. You may discover an entirely new way to enjoy life.