Haben Sie es satt, in langen Schlangen für Bubble Tea zu warten? Keine Lust, jeden Tag Milchtee zu trinken? Zu heiß, um nach draußen zu gehen? Die erfrischende Kombination aus Gemüse und Obst ist da, um den fettigen, glühenden Eindruck des Sommers wegzuspülen! Wenn Sie keine faserigen Gemüse essen möchten, sie aber wie ein Getränk genießen wollen, dürfen Sie diese hausgemachten Obst- und Gemüsesaft-Geheimnisse nicht verpassen.

Warum brauchen wir Obst- und Gemüsesäfte?

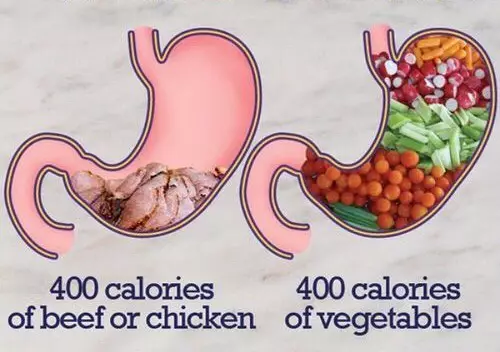

Obst- und Gemüsesäfte enthalten reichlich Vitamine aus Früchten und Nährstoffe aus Gemüse, die direkt die Darmbewegung fördern und die Verdauung verbessern. Zudem liefern Säfte weniger Kalorien bei ausgewogener Nährstoffversorgung, was sie bei Vegetariern und (heimlichen) Gewichtsmanagern beliebt macht. Heute gelten Säfte sogar als Heilmittel für diverse Subgesundheitszustände und Krankheiten.

Der ultimative Anfängerleitfaden für Obst- und Gemüsesäfte

5-Schritte-Perfekte-Entsaftungsformel

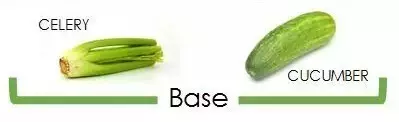

1、Basis wählen: Wie Sellerie oder Gurke.

2、Blattgemüse hinzufügen: Wie Kopfsalat, Koriander, Grünkohl oder Spinat.

3、Aromatisches Obst/Gemüse hinzufügen: Wie Apfel, Rote Bete, Ananas, Karotte oder Birne.

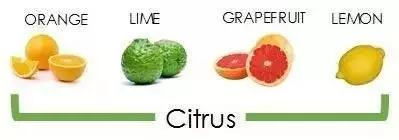

4、Zitrusfrucht für erfrischende Säure: Wie Orange, Limette, Grapefruit oder Zitrone.

5、Spezialaroma (optional): Wie Ingwer, Weizengras, Chilipulver oder andere Kräuter.

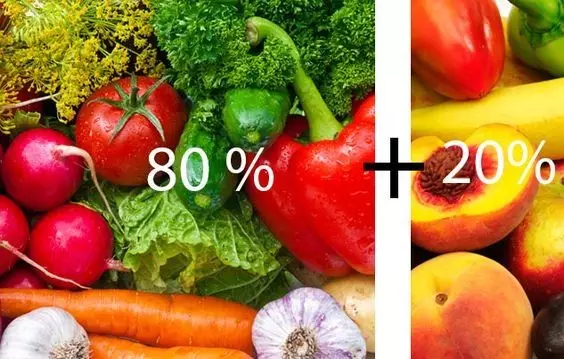

Die 80/20-Regel beim Entsaften

Die australische Ernährungswissenschaftlerin Claire Georgiou entwickelte die 80/20-Regel zur besseren Ernährungskontrolle: Das Verhältnis von Obst zu Gemüse sollte etwa 20% zu 80% betragen. Da die meisten Früchte viel Zucker enthalten (Ausnahmen wie Rote Bete/Karotten), sollte ihr Anteil reduziert werden. Gemüse liefert dagegen direkte Nährstoffe für effektive Körperaufnahme.

Claire Georgiou empfiehlt täglich Säfte verschiedener Farben, da jede Farbe spezifische Nährstoffe repräsentiert. Ihre Liste umfasst meist 2-5 Säfte als Regenbogensaft (mindestens drei grüne Gemüse). Claires Gesundheitsmantra: “Iss täglich einen Regenbogen” für ausreichende Mikronährstoffe, Antioxidantien und Pflanzenstoffe.

Beispiele der 80/20-Regel

- 1、Erstes Glas reiner Fruchtsaft → nächste vier Gläser Gemüsesäfte (2-3 vorwiegend grün)

- 2、Erste zwei Gläser 50% Obst/Gemüse → nächste zwei reine Gemüsesäfte

- 3、Jedes Glas mit sechs Zutaten → mindestens vier Gemüse

Flexibilität ist entscheidend. Halten Sie ein grobes 80/20-Verhältnis (Gemüse/Obst) mit täglich 50% grünem Saftanteil ein, um Zucker/Kohlenhydrate optimal zu verarbeiten.

Vorsichtsmaßnahmen

Frischsäfte sollten nüchtern 30 Minuten vor Mahlzeiten getrunken werden, wenn das Verdauungssystem Nährstoffe optimal aufnimmt. Sofort nach dem Pressen verzehren und lichtgeschützt lagern. Vor dem Entsaften Gesundheitszustand prüfen: Bei Mundgeschwüren, Diabetes oder verwandten Erkrankungen vorsichtig konsumieren.

5 Säfte gegen Sommerbeschwerden!

Detox-Grünsaft

Zutaten

- Mittelgroße Gurke……1

- Mittelgroße Grünkohlblätter……4

- Koriander (mit Stängeln/Blättern)……2 Zweige

- Großer Apfel……1

- Ingwer (4 cm)……1,5 Stücke

- Geschälte Limette……1-2

- Mittelgroße Selleriestangen……3

Zubereitung

- Zutaten hacken/schneiden und in Entsafter geben

- Absieben und genießen!

Antioxidativer Rote-Bete-Saft

Zutaten

- Apfel……1

- Zitrone……1

- Sellerie……2 Stangen

- Rote Bete (mit Blättern)……1

- Spinat……1 Handvoll

Zubereitung

- Alle Zutaten waschen, Zitrone schälen

- Rote Bete klein schneiden, zuletzt entsaften

- Sofort trinken!

Süß-saurer Sommersaft

Zutaten

- Birnen……3

- Basilikum (mit Stängeln/Blättern)……2 Zweige

- Zitronen……2

- Sellerie……4 Stangen

- Karotten……2

- Gurke……1

Zubereitung

- Zutaten waschen, Zitrone schälen

- In aufgeführter Reihenfolge entsaften

- Umrühren und genießen!

Zuckerarmer Gesundheitssaft

Zutaten

- Preiselbeeren……100g

- Limette……1

- Apfel……1

- Brokkoli……½

- Grünkohl……½

- Rosmarin……2 Zweige

- Minze……2 Zweige

Zubereitung

- Alle Zutaten waschen, Limette schälen

- Für Entsafter zerkleinern

- Entsaften und optional Eis hinzufügen

Samba-Passion-Saft

Zutaten

- Karotten……2

- Knoblauch……2 Zehen

- Rote Paprika……2

- Orangen……2

- Banane……1

- Ingwer……1 Stück

Zubereitung

- Zutaten waschen, Zitrone schälen

- Zerkleinert in Entsafter geben

- Glasrand mit Salz dekorieren!

Fazit

Obst- und Gemüsesäfte sind nicht nur gesunde Getränke, sondern auch sommerliche Erfrischungen. Meistern Sie diese Entsaftungstipps und die 80/20-Regel, um Sommerbeschwerden zu besiegen und täglich ein vitales, gesundes Leben zu genießen!