Best time to visit Denmark

Denmark has a temperate maritime climate, with mild winters and cool summers. Warm currents from the Atlantic keep the cold from becoming severe, which makes the country a good destination all year round. Here is what to expect in each season:

Spring (March–May): Flowers in full bloom and lively parks make this the perfect time for outdoor walks and garden visits.

Summer (June–August): Peak travel season, with sunny days, outdoor festivals, and beach getaways.

Autumn (September–November): A picturesque season with golden foliage, cultural events, and mild weather.

Winter (December–February): A magical time of festive Christmas markets, cozy cafés, and winter sports.

Packing tip: Layers are key. A waterproof jacket is recommended all year round because of occasional rain.

Top 5 must-see destinations in Denmark

1. Nyhavn – Copenhagen's most picturesque harbor

Nyhavn, one of Copenhagen's best-known spots, is a colorful waterfront lined with historic buildings, lively bars, and charming cafés. It is ideal for a sunset stroll or a canal tour.

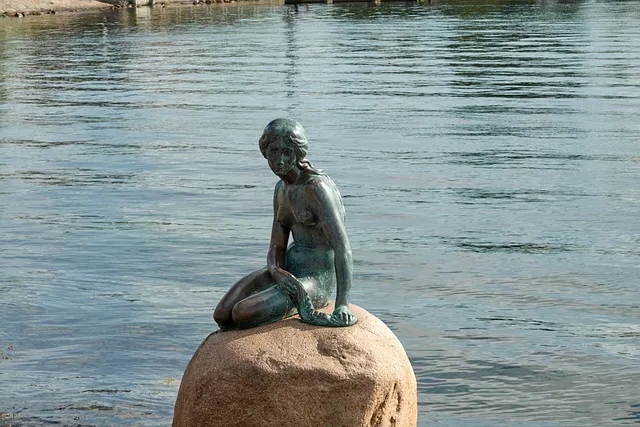

2. The Little Mermaid – Denmark's iconic monument

Inspired by Hans Christian Andersen's beloved fairy tale, the Little Mermaid statue sits by the harbor and attracts millions of visitors every year.

3. Tivoli Gardens – One of the world's oldest amusement parks

Opened in 1843, Tivoli Gardens is one of the oldest amusement parks in the world, offering vintage roller coasters, live shows, and charming gardens.

4. Kronborg Castle – The home of Shakespeare's Hamlet

A UNESCO World Heritage Site, Kronborg Castle is known as the setting of Hamlet. Its grand halls, medieval walls, and sweeping sea views make it a must-visit.

5. National Museum of Denmark – A journey through history

The National Museum of Denmark holds a wealth of Viking artifacts, royal collections, and exhibitions that trace the country's rich history.

Danish culinary delights

Smørrebrød – Denmark's open-faced sandwich

A staple of Danish cuisine, Smørrebrød is buttered rye bread topped with fish, meats, cheese, and fresh garnishes.

Wienerbrød – The famous Danish pastry

Known internationally as the Danish pastry, Wienerbrød is a flaky, buttery treat, often filled with custard, chocolate, or fruit.

Pickled herring – A Nordic classic

A Scandinavian favorite, pickled herring is served with rye bread and mustard and is commonly enjoyed during traditional Danish celebrations.

Carlsberg beer – Denmark's brewing heritage

Denmark is home to Carlsberg, one of the most famous breweries in the world, known for its refreshing lagers and rich brewing history.