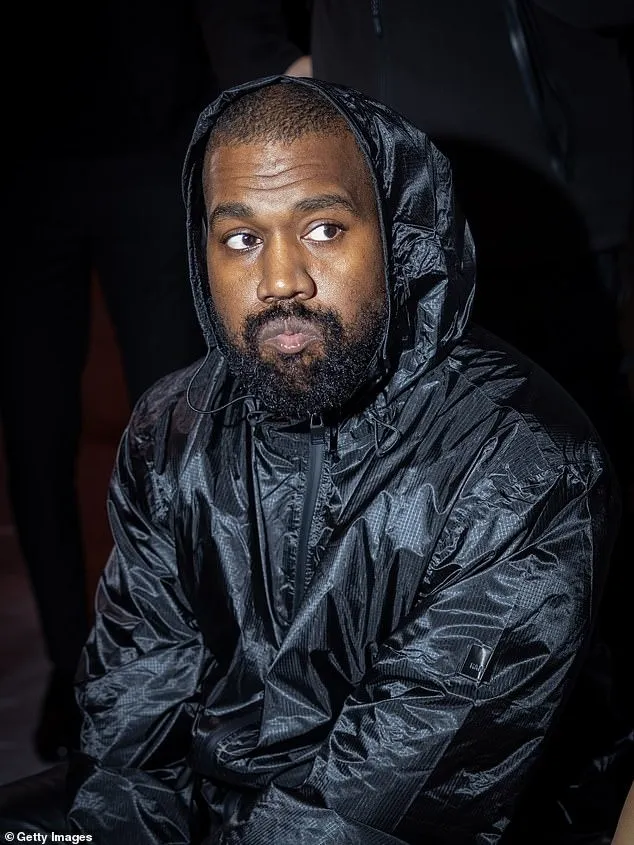

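A Week of Apologies

Last week, Kanye West's publicity team was certainly busy. Starting Monday, his apology dominated North American entertainment headlines.

He took out a full-page ad in The Wall Street Journal to apologize for his past hateful remarks.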

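Attributing His Mistakes to Mental Health

In the letter, Kanye attributed all of his past mistakes to mental illness.

He said that a 2002 car accident caused a brain injury. Doctors focused on his visible injuries (fractures and swelling) but overlooked the trauma to his brain.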

According to the letter, the accident affected his frontal cortex and triggered bipolar I disorder.

Kanye described denial, a defense mechanism produced by the disorder.

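"During a manic episode, you don't realize that you are sick. You may think other people are overreacting. You feel clearer than ever, yet you lose all control," he explained.

The illness convinces him that he does not need help. It makes him feel strong, confident, and unstoppable, which led to repeated mistakes.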

A Four-Month Manic Episode

Last year, Kanye went through a four-month manic episode. At times he struggled with the will to live.

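He has since begun treatment, which has given him the chance to recognize his mistakes and reflect on his behavior. That reflection prompted his public apology.

According to a source, his apology may be sincere, and it may also be motivated in part by a feeling of social rejection. Many people around him reportedly distanced themselves because of his "utterly unacceptable behavior."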

The Role of His Wives

Both of Kanye's wives, past and present, played an important role in supporting him.

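Kim Kardashian reportedly tried to help him stick to his medication schedule and keep his doctor's appointments.

But Kanye often resisted. He said the medication made him feel unlike himself and got in the way of his creativity.

This refusal contributed to the breakdown of the marriage. He abruptly stopped taking his medication, his condition worsened, and the couple eventually split publicly.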

Challenges with Bianca

After Kanye began dating Bianca Censori, his condition worsened.

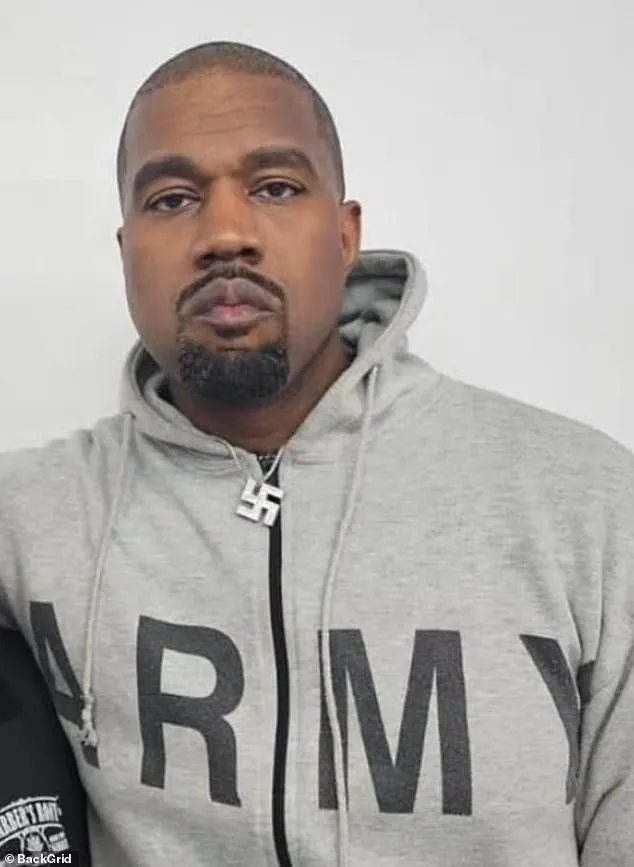

A friend said Bianca was furious after Kanye made antisemitic remarks. She did not want him to draw attention in that way.

According to People magazine, Bianca was unhappy with Kanye's lifestyle and considered divorce several times.

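The source added: "When his mental state is unstable, he causes dramatic incidents that have lasting consequences, and once he stabilizes, he clearly recognizes the harm he has done to his family, his friends, and himself."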

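Bianca's Support and Agency

Despite the difficulties, Bianca chose to support Kanye.

Sources stress that she has maintained her independence and has plans of her own. She was not being manipulated; in fact, she was the one in control of the relationship.

Their notorious moments of public nudity underscore an unconventional but mutual dynamic.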

Medication Adjustment and Recovery

When Kanye's four-month manic phase ended, he changed medications. The change triggered severe depression.

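Bianca noticed the change and took him to a rehabilitation center in Switzerland.

Treatment stabilized his mental state, and the couple's relationship improved.

His current medication is reported to dull his emotions. Recent photos from Los Angeles suggest he may still be undergoing treatment.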

Public Skepticism

Insiders suggest that Kanye is simply a person who needs help. With his wife's help, he is returning to a more stable life.

Most online observers, however, remain skeptical.

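One commenter wrote: "His last album flopped. The new album looks like a desperate attempt at rebranding."

Some argue that he is still manipulating public perception. Reports have questioned whether he actually attended the Swiss treatment facility last year.

Critics point out that managing bipolar disorder does not excuse harmful behavior. Many people with the disorder lead responsible lives without blaming their illness.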

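Bianca Under Scrutiny

Bianca has also faced public criticism. Some commentators joke that she is more interested in public nudity than in leaving Kanye.

This shows the power of public perception and how celebrity narratives can be distorted.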