The rapid progress of large language models (LLMs) and their growing computational requirements have created a pressing need for optimized solutions that manage memory usage and inference speed. As models like GPT-3, Llama, and other large-scale architectures push the limits of GPU capacity, efficient hardware utilization becomes crucial. High memory requirements, slow token generation, and limited memory bandwidth have all contributed to significant performance bottlenecks. These problems are particularly noticeable when deploying LLMs on NVIDIA Hopper GPUs, where balancing memory usage and computational speed becomes more challenging.

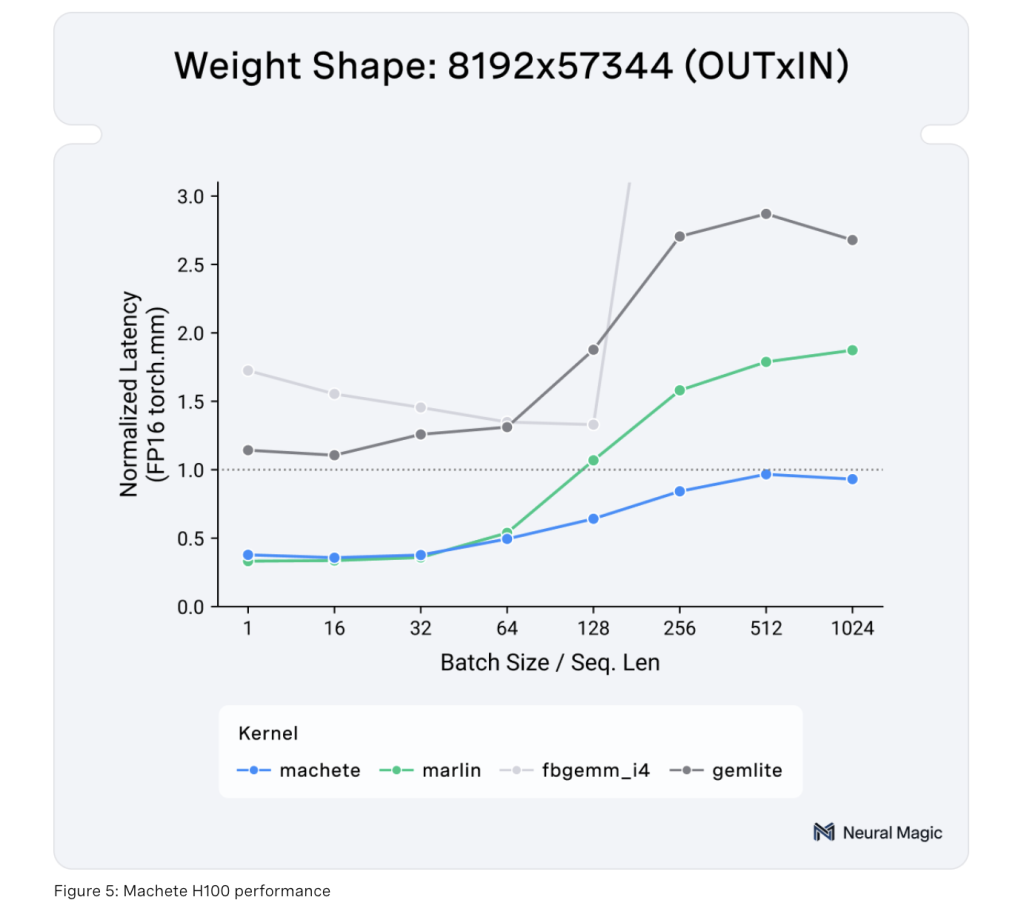

Neural Magic introduces Machete, a new mixed-input GEMM kernel for NVIDIA Hopper GPUs that represents a major advance in high-performance LLM inference. Machete uses w4a16 mixed-input quantization (4-bit weights, 16-bit activations) to drastically reduce memory usage while maintaining consistent computational performance. This approach allows Machete to reduce memory requirements by roughly 4x in memory-bound environments. Compared with FP16 precision, Machete matches compute-bound performance while greatly improving efficiency for memory-constrained deployments. As LLMs continue to grow, addressing memory bottlenecks with practical solutions like Machete becomes essential for smoother, faster, and more efficient model inference.
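To make the w4a16 idea concrete, here is a minimal NumPy sketch of group-wise 4-bit weight quantization paired with 16-bit activations. It is not Machete's code: the group size of 128, the symmetric scheme with an offset of 8, the nibble-packing layout, and the function names `quantize_w4`, `dequantize_w4`, and `w4a16_matmul` are all assumptions chosen for illustration. A real kernel dequantizes small tiles in registers inside the GEMM instead of materializing the full FP16 weight matrix as this sketch does.

```python
import numpy as np

GROUP_SIZE = 128  # assumption: one FP16 scale per 128 weights along K

def quantize_w4(w: np.ndarray, group_size: int = GROUP_SIZE):
    """Symmetric group-wise int4 quantization of a (K, N) weight matrix.

    Returns (packed, scales): packed is uint8 of shape (K // 2, N) holding two
    4-bit codes per byte; scales is float16 of shape (K // group_size, N).
    """
    k, n = w.shape
    assert k % group_size == 0 and group_size % 2 == 0
    groups = w.astype(np.float32).reshape(k // group_size, group_size, n)
    # Map each group's largest magnitude to 7 (int4 range is [-8, 7]);
    # round the scales to FP16 first so quantization uses the stored values.
    scales = (np.abs(groups).max(axis=1, keepdims=True) / 7.0).astype(np.float16).astype(np.float32)
    scales[scales == 0] = 1.0
    q = np.clip(np.round(groups / scales), -8, 7).astype(np.int8)
    codes = (q + 8).astype(np.uint8).reshape(k, n)  # store as unsigned 0..15 (offset 8)
    packed = codes[0::2] | (codes[1::2] << 4)       # low nibble = even row, high nibble = odd row
    return packed, scales.reshape(k // group_size, n).astype(np.float16)

def dequantize_w4(packed: np.ndarray, scales: np.ndarray, group_size: int = GROUP_SIZE):
    """Unpack the 4-bit codes and apply the per-group scales, returning FP16 weights."""
    k = packed.shape[0] * 2
    codes = np.empty((k, packed.shape[1]), dtype=np.int16)
    codes[0::2] = packed & 0x0F
    codes[1::2] = packed >> 4
    s = np.repeat(scales.astype(np.float32), group_size, axis=0)
    return ((codes - 8) * s).astype(np.float16)

def w4a16_matmul(x: np.ndarray, packed: np.ndarray, scales: np.ndarray):
    """FP16 activations (M, K) times int4 weights, FP32 accumulation, FP16 output."""
    w = dequantize_w4(packed, scales)
    return (x.astype(np.float32) @ w.astype(np.float32)).astype(np.float16)

rng = np.random.default_rng(0)
K, N, M = 1024, 1024, 16
w = (0.02 * rng.standard_normal((K, N))).astype(np.float16)
x = rng.standard_normal((M, K)).astype(np.float16)

packed, scales = quantize_w4(w)
ratio = w.nbytes / (packed.nbytes + scales.nbytes)
ref = x.astype(np.float32) @ w.astype(np.float32)
out = w4a16_matmul(x, packed, scales).astype(np.float32)
rel_err = np.linalg.norm(out - ref) / np.linalg.norm(ref)
print(f"weight memory reduction: {ratio:.2f}x, relative error: {rel_err:.3f}")
```

The reduction lands slightly below 4x because each weight also carries its share of a scale: 4 + 16/128 = 4.125 bits per weight, versus 16 bits for FP16, gives about 3.88x under these assumptions.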

One of Machete's key innovations lies in its technical implementation. Built on CUTLASS 3.5.1, Machete uses Hopper's `wgmma` tensor core instructions to overcome compute-bound limitations, resulting in faster model inference. It also incorporates weight pre-shuffling, which enables faster shared-memory loads and mitigates bottlenecks that typically arise in large-scale LLMs. Pre-shuffling lays the weights out so they can be read from shared memory with 128-bit loads, increasing throughput and reducing latency. In addition, Machete has improved upconversion routines that efficiently convert 4-bit elements to 16-bit, maximizing tensor core utilization. Together, these innovations make Machete an effective way to improve LLM performance without the memory and compute overhead of running the weights at higher precision.
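The upconversion step can be illustrated with a widely used bit trick for turning 4-bit integers into FP16 without an integer-to-float conversion instruction. This NumPy sketch shows that general technique; it is not necessarily the exact routine Machete uses, and the offset-8 int4 encoding and the function names `unpack_nibbles` and `upconvert_int4_to_f16` are assumptions for illustration. The FP16 bit pattern `0x6400` encodes 1024.0, and at that exponent one unit in the last mantissa place equals 1, so OR-ing a 4-bit code into the low mantissa bits yields exactly 1024 + code.

```python
import numpy as np

F16_1024_BITS = np.uint16(0x6400)  # bit pattern of float16 1024.0 (exponent 2^10, mantissa 0)
F16_BIAS = np.float16(1032.0)      # 1024 + 8: removes the magic value and the assumed int4 offset of 8

def unpack_nibbles(packed: np.ndarray) -> np.ndarray:
    """Split each uint8 into its low and high 4-bit fields (low nibble first)."""
    flat = packed.ravel()
    out = np.empty(flat.size * 2, dtype=np.uint8)
    out[0::2] = flat & 0x0F
    out[1::2] = flat >> 4
    return out

def upconvert_int4_to_f16(packed: np.ndarray) -> np.ndarray:
    """Convert offset-8 int4 codes to float16 using one OR and one subtraction."""
    codes = unpack_nibbles(packed).astype(np.uint16)
    bits = F16_1024_BITS | codes             # reinterpreted as float16: exactly 1024 + code
    return bits.view(np.float16) - F16_BIAS  # 1024 + code - 1032 = code - 8, in [-8, 7]

example = np.array([0x80, 0xF7, 0x3C], dtype=np.uint8)
print(upconvert_int4_to_f16(example))
```

For the example bytes this produces -8, 0, -1, 7, 4, -5 (low nibble first). On a GPU, the same OR-and-subtract can be applied to two FP16 values packed in one 32-bit register at a time, which is what makes the trick cheaper than converting elements one by one.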

Machete's significance is clearest against the growing demand for LLM deployments that are both memory- and compute-efficient. By reducing weight memory roughly fourfold, Machete helps ensure that even the largest LLMs, such as Llama 3.1 70B and Llama 3.1 405B, can run efficiently on available hardware. In testing, Machete achieved notable results, including a 29% increase in input throughput and a 32% faster output token generation rate for Llama 3.1 70B, with a time-to-first-token (TTFT) of under 250 ms on a single H100 GPU. Scaled to a 4xH100 setup, Machete delivered a 42% throughput speedup on Llama 3.1 405B. These results demonstrate both the performance gains Machete provides and its ability to scale across different hardware configurations. Planned support for further optimizations, such as w4a8 FP8, AWQ, QQQ, and improved performance at low batch sizes, further strengthens Machete's role in pushing the boundaries of efficient LLM deployment.
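A back-of-the-envelope calculation shows why a roughly 4x reduction matters for these two models. The sketch counts weight memory only, using nominal parameter counts of 70B and 405B, 4-bit weights plus one FP16 scale per 128 weights (an assumed group size), and an assumed 80 GB of memory per H100. It ignores the KV cache, activations, and runtime overhead, all of which also need room; the helper `weight_gb` is hypothetical.

```python
from typing import Optional

GB = 1e9           # decimal gigabytes, as GPU memory is usually quoted
H100_MEM_GB = 80   # assumption: 80 GB H100 (SXM/PCIe variants)

def weight_gb(n_params: float, weight_bits: int, group_size: Optional[int] = None,
              scale_bits: int = 16) -> float:
    """Weight storage in GB; optional per-group scales add scale_bits / group_size bits per weight."""
    bits = weight_bits + (scale_bits / group_size if group_size else 0)
    return n_params * bits / 8 / GB

rows = []
for name, n_params, n_gpus in [("Llama 3.1 70B", 70e9, 1), ("Llama 3.1 405B", 405e9, 4)]:
    fp16 = weight_gb(n_params, 16)
    w4 = weight_gb(n_params, 4, group_size=128)
    budget = n_gpus * H100_MEM_GB
    rows.append((name, fp16, w4, budget))
    print(f"{name}: FP16 {fp16:.0f} GB, w4a16 {w4:.0f} GB, budget {budget} GB on {n_gpus}x H100")
```

Under these assumptions, FP16 weights for the 70B model (about 140 GB) exceed a single 80 GB H100, while 4-bit weights (about 36 GB) fit with headroom for the KV cache. Likewise, the 405B model drops from about 810 GB to about 209 GB, within the 320 GB of a 4xH100 node.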

In conclusion, Machete represents a significant step forward in optimizing LLM inference on NVIDIA Hopper GPUs. By addressing the critical bottlenecks of memory usage and bandwidth, Machete introduces a new approach to managing the demands of large-scale language models. Its mixed-input quantization, low-level optimizations, and scalability make it a valuable tool for improving inference efficiency while reducing computational costs. The gains demonstrated on Llama models suggest that Machete is poised to become a key enabler of efficient LLM deployments and a new performance benchmark for memory-constrained environments. As LLMs continue to grow in scale and complexity, tools like Machete will be essential for deploying these models efficiently, delivering faster and more reliable outputs without compromising quality.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.