While many AI companies are still weighing whether to take the open-source route, Alibaba’s tech team has gone ahead and released its video generation model, WanXiang, as open source. The release includes the full inference code and weights under a highly permissive open-source license.

The Challenges in Video Generation

Anyone familiar with video generation models knows that they face several challenges. Most models struggle to render complex human movements accurately, such as gymnastic flips or dance routines. Realistic interactions between objects, such as how they bounce or react to each other, are hit or miss. Longer text prompts often lead to “selective adherence,” where the model follows only part of the instructions. Models that get all three of these areas right are rarely open-sourced.

Alibaba’s WanXiang Approach

Alibaba’s WanXiang model addresses all three. It captures complex actions like rotations, flips, and jumps, and it reproduces realistic physical phenomena such as collisions, rebounds, and cutting. It also handles long-form text prompts in both English and Chinese, interpreting them accurately and producing the corresponding scene transitions and character interactions.

Let’s take a look at some of the official demos:

Demo 1: Diving Action

Prompt: A man performs a professional diving stunt from a platform. He is wearing red swim trunks and is inverted in mid-air, arms stretched, legs together. The camera angle shifts as he dives into the pool, causing a splash against the blue backdrop.

Demo 2: Equestrian Event

Prompt: A rider expertly guides their horse through a show-jumping course. The rider is focused, wearing professional gear, while the horse jumps smoothly, clearing each obstacle with impressive precision. The scene is dynamic and tense, with a natural outdoor background.

Demo 3: Cat Boxing Match

Prompt: Two anthropomorphic cats in boxing gear are engaged in an intense fight in a brightly lit ring. The scene captures fast movements, powerful punches, and vivid action details.

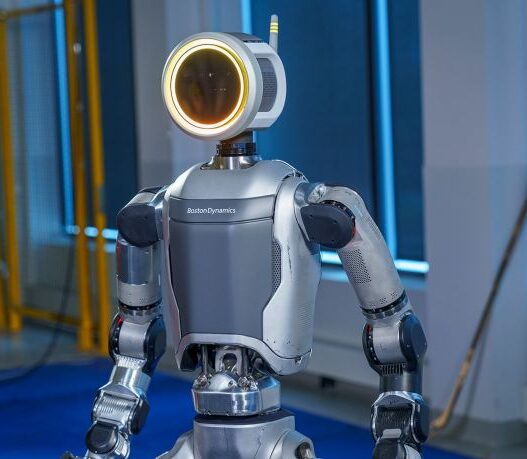

Practical Applications of WanXiang

You might wonder, what’s the point of an open-source video generation model if it can’t run on typical hardware? Fortunately, Alibaba’s model comes in two versions: 14B and 1.3B parameters. The larger 14B version is built for high performance, while the 1.3B version is designed to run efficiently on consumer-level GPUs such as the RTX 4090, with as little as 8.2 GB of VRAM. Even so, it produces high-quality 480p video output, making it well suited to academic research and downstream model development.
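To put the two model sizes in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes 16-bit weights (2 bytes per parameter), which is an assumption for illustration and not a detail stated by the release. The helper name `weight_memory_gb` is hypothetical. Activations, the text encoder, and the VAE all add to the real footprint, which is why the quoted 8.2 GB figure is higher than the weights-only number for the 1.3B model.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    bytes_per_param=2 assumes 16-bit weights (an assumption for this sketch);
    use 4 for 32-bit weights.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts taken from the two published model sizes.
model_sizes = {"1.3B": 1.3e9, "14B": 14e9}

for name, params in model_sizes.items():
    gb = weight_memory_gb(params)
    print(f"{name}: about {gb:.1f} GB for 16-bit weights")
```

Under these assumptions the weights come to roughly 2.6 GB for the 1.3B version and 28 GB for the 14B version, which illustrates why only the smaller model fits comfortably on a single consumer GPU.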

The WanXiang model, even in its smaller form, posts impressive results on the widely used VBench benchmark. With a total score of 86.22%, it outperforms other video generation models, including Sora, HunyuanVideo, and Gen3.

Key Features of WanXiang

1. Text-to-Video Generation

The “text-to-video” feature is WanXiang’s standout capability: it turns text prompts into high-quality videos. From a description alone, it can generate cinematic effects, stylized text, or even animated logos.

- Example: In a neon-lit cityscape, the word “Welcome” appears on a sign against a vibrant, cyberpunk backdrop.

2. Complex Motion Generation

Complex movement is often the hardest challenge for video generation models. Whether it’s spinning, jumping, or running, even minor missteps can ruin the realism. However, WanXiang has excelled in this area, handling movements with remarkable precision.

- Example 1: A basketball player jumps to make a shot. The model accurately captures the motion of the player, from the jump to the ball’s trajectory.

- Example 2: A clown walks past a burning van, his exaggerated movements and facial expressions captured with cinematic style.

3. Long-Text Instruction Following

WanXiang doesn’t just handle short, simple prompts. It can generate highly detailed scenes based on long text descriptions, maintaining consistency across multiple subjects and actions.

- Example: A lively party scene with diverse dancers, vibrant decorations, and a sense of festivity, captured in a wide-angle shot.

4. Physics Modeling

The model also impresses with its ability to simulate realistic physical interactions. For example, when a transparent glass of milk is tipped over, WanXiang accurately simulates the flow of the liquid and its surface tension.

- Example: A strawberry falls into a glass of water. The model captures the interaction between the fruit and the water, showcasing the physics of water droplets and the strawberry’s descent in vivid detail.

Core Technological Innovations

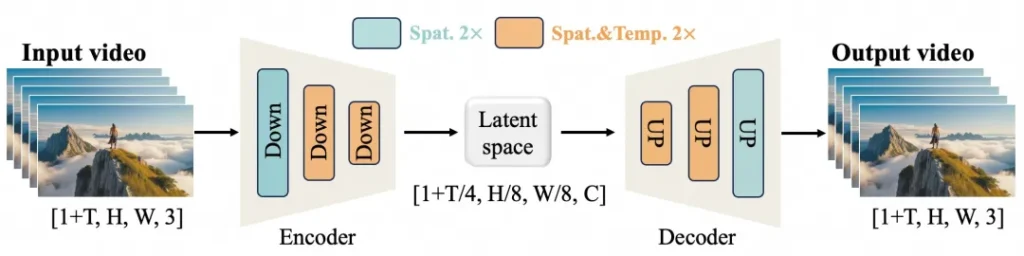

WanXiang’s powerful performance stems from two key innovations: the efficient causal 3D VAE (Variational Autoencoder) and the Video Diffusion Transformer (DiT).

1. Efficient Causal 3D VAE

Alibaba’s team designed a new 3D VAE architecture specifically for video generation. It compresses video more efficiently along both the temporal and spatial dimensions, which reduces memory usage, and it enforces temporal causality: each encoded frame depends only on the current and earlier frames, which is important for maintaining the flow of events in a video.
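The idea of temporal causality can be illustrated with a toy example. The sketch below is not WanXiang’s VAE; it is a minimal causal convolution along the time axis, written in plain Python, where each frame is reduced to a single number for simplicity. The function name `causal_temporal_conv` and the kernel values are hypothetical. The key point is that padding is applied only on the past side, so an output at time t never sees frames after t.

```python
def causal_temporal_conv(frames, kernel):
    """Causal 1D convolution along time.

    output[t] is a weighted sum of frames[t - k + 1 .. t], where k is the
    kernel length. Missing past frames are treated as zeros.
    """
    k = len(kernel)
    # Pad the past only (left side); the future is never padded or read.
    padded = [0.0] * (k - 1) + list(frames)
    return [
        sum(kernel[j] * padded[t + j] for j in range(k))
        for t in range(len(frames))
    ]


frames = [1.0, 2.0, 3.0, 4.0]   # one scalar per frame, for illustration
kernel = [0.25, 0.25, 0.5]      # last weight applies to the current frame

out = causal_temporal_conv(frames, kernel)

# Changing the final frame must leave all earlier outputs untouched.
altered = causal_temporal_conv([1.0, 2.0, 3.0, 100.0], kernel)
print(out[:3] == altered[:3])
```

A real video VAE would apply the same left-only padding inside 3D convolutions over full image tensors, but the dependency pattern is the same as in this scalar version.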

2. Video Diffusion Transformer

WanXiang also uses the state-of-the-art Diffusion Transformer (DiT) architecture. This approach applies full attention to model long-range spatial and temporal dependencies. The model, built on linear noise trajectories, generates videos with consistent spatio-temporal alignment.
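To make “linear noise trajectories” concrete, here is a minimal sketch of the general idea as it appears in flow-matching style training: a sample is moved along a straight line between data and noise, and the quantity to predict is the constant velocity along that line. This is an illustration under assumptions, not WanXiang’s training code. The convention that t = 0 is data and t = 1 is noise, and the names `interpolate` and `velocity`, are choices made for this example.

```python
import random


def interpolate(x_data, x_noise, t):
    """Point on the straight line from data (t=0) to noise (t=1)."""
    return [(1.0 - t) * d + t * n for d, n in zip(x_data, x_noise)]


def velocity(x_data, x_noise):
    """Constant velocity along that line: the direction from data to noise."""
    return [n - d for d, n in zip(x_data, x_noise)]


random.seed(0)
x_data = [0.2, -0.5, 0.9]                            # stand-in for a latent
x_noise = [random.gauss(0.0, 1.0) for _ in x_data]   # Gaussian noise sample

x_mid = interpolate(x_data, x_noise, 0.5)
v = velocity(x_data, x_noise)

# Because the path is a straight line, stepping back from t=0.5 to t=0
# along -v recovers the data exactly (up to floating-point error).
recovered = [x - 0.5 * vi for x, vi in zip(x_mid, v)]
print(all(abs(r - d) < 1e-9 for r, d in zip(recovered, x_data)))
```

In practice a network would be trained to predict `v` from `x_mid` and `t`, and sampling would integrate that prediction from noise back to data. The straight-line path is what keeps the target simple.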

The Future of Open-Source Models

With this release, Alibaba’s WanXiang model has set a new standard for video generation, demonstrating that open-source models can outperform closed-source counterparts. Coupled with Alibaba’s other open-source efforts, such as the Qwen language models, this move marks a significant moment in AI development.

By open-sourcing these powerful models, Alibaba has positioned itself at the forefront of AI innovation. Its models, including WanXiang, are now available on platforms like GitHub, Hugging Face, and ModelScope (MoDa), supporting a wide range of use cases from academic research to commercial video production.

Conclusion

WanXiang’s release is a game-changer for the AI video generation landscape. With its ability to create detailed, high-quality videos from text prompts, simulate complex motion, and model realistic physics, it is poised to revolutionize industries such as advertising, film, and even gaming. Alibaba’s commitment to open-source AI is breaking down barriers and setting new standards for what’s possible in the world of video creation.