イランは「ユーラシアの架け橋」と呼ばれ、豊かな歴史、息をのむ建築物、深い文化的遺産を有する国です。世界初の法典であるハンムラビ法典や千夜一夜物語に登場する伝説の都市を擁し、時を超えた驚異に満ちた土地です。壮麗なペルシャモスクから古代ゾロアスター教の寺院まで、イランは数千年にわたる文明の旅を提供します。

イラン必見の観光スポット

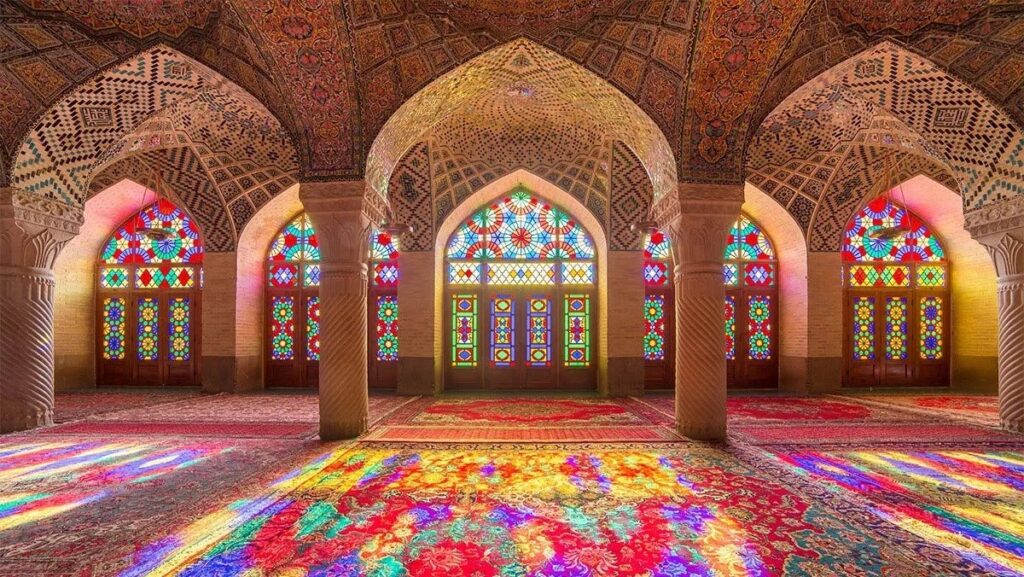

ナーシール・アルムルク・モスク(ピンクモスク)

シラーズにある「ピンクモスク」の愛称で親しまれるこのモスクは、色鮮やかなステンドグラスの窓で訪れる人々を魅了します。陽光が差し込むと、礼拝堂はカラフルな万華鏡へと変貌し、幻想的な雰囲気を創り出します。

ペルセポリス:栄光のペルシャ帝国

ユネスコ世界遺産に登録される古代都市ペルセポリスは、アケメネス朝の儀式の都でした。壮大な遺跡には精巧な彫刻と宮殿群が残り、ペルシャ文明の輝きを垣間見ることができます。

スィーオセポル橋(33アーチ橋)

サファヴィー朝時代の技術の傑作であるこの橋は、33のシンメトリカルなアーチが川面に美しく映え、特に夜間は幻想的な景観を作り出します。

ナクシェ・ジャハーン広場

イマーム広場としても知られるこの広場は世界第2位の大きさを誇り、壮麗なモスク群、バザール、王宮に囲まれたペルシャ文化と歴史の中心地です。

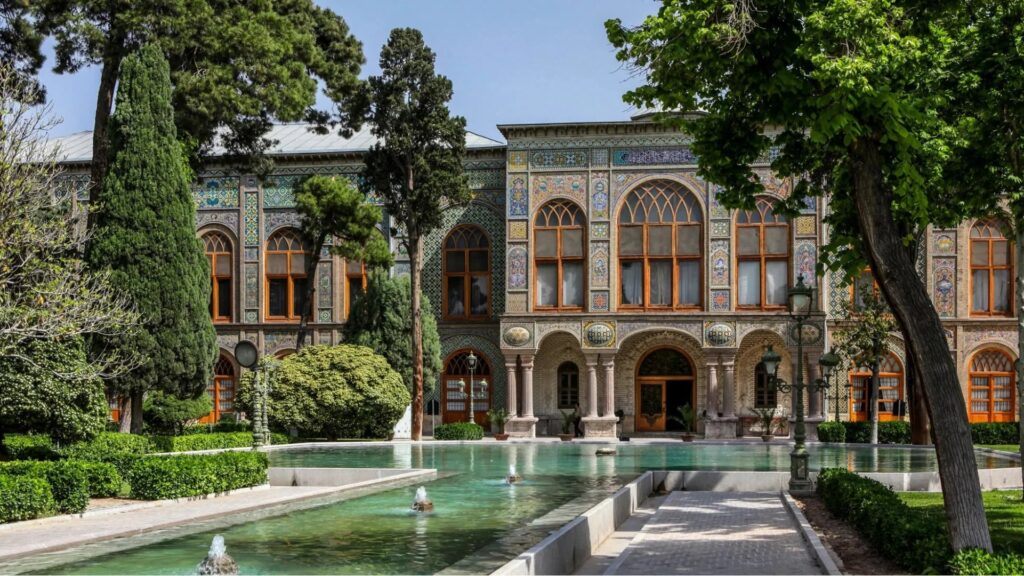

ゴレスタン宮殿

テヘランにあるユネスコ世界遺産の宮殿複合体で、ペルシャとヨーロッパの建築様式が見事に融合。鏡の間と精緻なタイル装飾がガージャール朝の栄華を物語ります。

イスファハン・ジャーメ・モスク

11世紀に建造されたイラン最古級のモスクで、各王朝によるイスラム建築の変遷を体現する歴史的宝庫です。

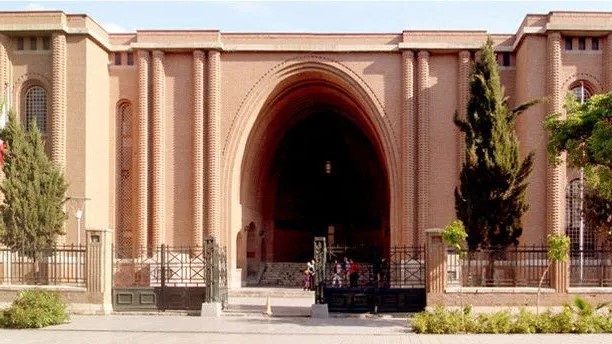

イラン国立博物館

古代ペルシャ、エラム、サーサーン朝時代の貴重な考古学遺物を収蔵する歴史愛好家必見の博物館です。

ペルシャ料理のおすすめ

ゴルメ・サブジ(ハーブシチュー)

新鮮なハーブと赤インゲン豆、羊肉を長時間煮込んだ国民的料理。サフランライスと共に供される家庭の味です。

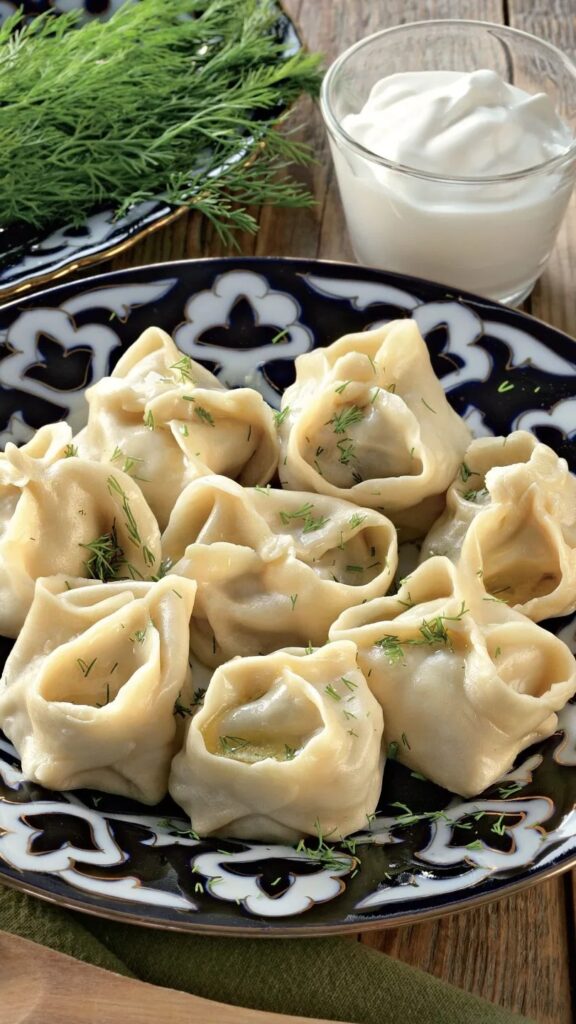

マントゥ(ペルシャ風ダンプリング)

薄皮にスパイス効かせたひき肉を包み、ヨーグルトソースとハーブをかけた中央アジア風料理のイラン版。

ラヴァシュ(伝統的平焼きパン)

ケバブやチーズ、野菜を包んで食べる薄型の伝統パン。全ての食事に欠かせません。

バーデムジャン(ナスシチュー)

トマトベースのシチューに柔らかく煮込んだナスと肉、ペルシャスパイスが絶妙に調和した甘酸っぱい味わい。

ドゥーグ(ヨーグルトドリンク)

ミントと塩味の爽やかな発酵飲料。暑い日の水分補給に最適です。

ハルヴァ(ごま菓子)

ごま、蜂蜜、小麦粉で作る伝統菓子。口どけの良い食感で、ペルシャ茶と共に楽しむ午後の定番です。

ターディグ(黄金のご飯のおこげ)

サフランとバターで香り付けされたカリカリのご飯の底部分。ペルシャ料理の華です。

クービデ(ペルシャ風ケバブ)

玉ねぎとサフランで味付けしたジューシーなひき肉ケバブ。イランを代表するグルメです。

ベストシーズン

大陸性気候のイランでは夏季は酷暑、冬季は厳寒となります。おすすめの訪問時期:

- 春(3月~5月):温暖な気候で遺跡や庭園巡りに最適

- 秋(9月~11月):観光と砂漠ツアーに適した過ごしやすい季節

- 冬(12月~2月):アルボルズ山脈でのスキーが楽しめる

- 夏(6月~8月):カスピ海リゾートや北部がおすすめ(南部は酷暑)